What’s New in PICMG COM-HPC Specification 1.2

February 01, 2024


Christian Eder, Chair of the COM-HPC working group and Director of Market Intelligence at congatec, shares the key innovations in the high-performance Computer-on-Module standard.

The COM-HPC specification has been around for two and a half years. How has demand developed during this time?

COM-HPC is designed for high-performance Computer-on-Modules that can be deployed both as modular servers and as modular clients. Demand for such modules is strong, driven by the growing requirements of digitization and the IIoT, the use of artificial intelligence, vision-based situational awareness, and spiraling data processing needs. Developers working on new designs in these application fields have no doubt that COM-HPC modules are the right solution. Almost half of all new projects across the entire performance range of PICMG standards, which include COM Express and COM-HPC, use COM-HPC, and the first OEM solutions with 12th and 13th generation Intel Core processors and Intel Xeon D processors are already in series production. So the launch of COM-HPC was extremely smooth, and acceptance was high right from the start. The situation was very different when the COM Express specification was launched; we had to do a lot more convincing at the time. Involving manufacturer-independent bodies such as PICMG in the specification of new form factors is clearly a huge advantage.

What can customers expect from the new revision?

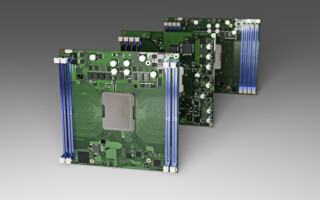

There are a few minor specification improvements that make the standard even more universally applicable and improve the ruggedness of designs. However, the most important innovation is the addition of a new ultra-compact footprint: COM-HPC Mini. This new specification brings high-performance features to a particularly small form factor measuring just 95 mm x 70 mm. Even space-constrained devices can now benefit from the 400-pin interface and its high bandwidth, which includes Thunderbolt and PCIe Gen 5, as well as Gen 6 in the future once corresponding processors become available.

COM-HPC is thus establishing itself as the most scalable Computer-on-Module (CoM) standard, covering a wide range of applications from small form factor to edge server designs. This simplifies the design-in process and makes it easier to develop complete product families. As COM-HPC modules support not only processor architectures such as x86 and Arm but also FPGAs, ASICs and AI accelerators, they provide a comprehensive standard for developing innovative applications based on the latest embedded and edge computing technologies.

Why is the Mini specification so important?

Well, on the one hand, embedded always means limited space, and even small devices need enormous bandwidth for AI and situational awareness. On the other hand, the 95 mm x 70 mm footprint is a perfect fit for migrating from COM Express to COM-HPC. In terms of space requirements, COM-HPC Mini modules fit into any design developed for COM Express Basic (95 mm x 125 mm) or Compact (95 mm x 95 mm). This makes migration extremely easy, which is why we expect a great deal from this form factor in the long term.
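To make the footprint argument concrete, here is a minimal Python sketch that compares the module outlines quoted in this article. It only checks whether one rectangular outline fits inside another in the same orientation. The `FOOTPRINTS` table and the `fits_within()` and `area_cm2()` helpers are hypothetical illustrations; a real migration also has to respect the connector positions, mounting holes and keep-out zones defined in the specifications.

```python
# Module outlines in mm (width, length), as quoted in this article.
FOOTPRINTS = {
    "COM-HPC Mini": (95, 70),
    "COM-HPC Size A": (95, 120),
    "COM Express Compact": (95, 95),
    "COM Express Basic": (95, 125),
}


def fits_within(module: str, envelope: str) -> bool:
    """Return True if the module outline fits inside the envelope outline.

    Simplification: both outlines are treated as rectangles in the same
    orientation; connector placement and mounting holes are ignored.
    """
    mod_w, mod_l = FOOTPRINTS[module]
    env_w, env_l = FOOTPRINTS[envelope]
    return mod_w <= env_w and mod_l <= env_l


def area_cm2(name: str) -> float:
    """Board area in square centimetres."""
    width, length = FOOTPRINTS[name]
    return width * length / 100


if __name__ == "__main__":
    for envelope in ("COM Express Compact", "COM Express Basic"):
        share = area_cm2("COM-HPC Mini") / area_cm2(envelope)
        print(f"Mini fits in {envelope}: {fits_within('COM-HPC Mini', envelope)}, "
              f"uses {share:.0%} of its area")
```

Run as a script, it reports that the Mini outline fits inside both COM Express outlines and occupies roughly 74% of the Compact and 56% of the Basic board area.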

COM-HPC Mini is the most important addition in Rev 1.2, bringing high-end embedded client performance to extremely space-constrained stationary and mobile devices.

But COM-HPC Mini has 10% fewer pins than COM Express Type 6. Doesn't that limit capabilities?

No. COM-HPC Mini targets small and mobile devices, not stationary and often highly complex systems with countless interfaces. Developers who have fully exploited COM Express Type 6 are primarily users of COM Express Basic, and they are migrating to COM-HPC Size A because they do not want to lose the option of offering significantly more interfaces. At 95 mm x 120 mm, this form factor is also marginally smaller than the 95 mm x 125 mm of COM Express Basic, and it offers an extremely high interface variety and density. The fact alone that it features 800 pins instead of 440 provides more interfaces and bandwidth. What’s more, fast interfaces such as PCI Express or Ethernet are even faster on COM-HPC. Theoretically, interface performance can increase by a factor of around 15 from COM Express Type 7 (Rev. 3.0) to COM-HPC Server. From COM Express (Rev. 3.0) to COM-HPC Client, I/O performance can even increase by a factor of 17.
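The pin figures in this answer are easy to sanity-check. The following minimal Python sketch uses only the pin counts quoted in this article; the `PIN_COUNTS` table and the `percent_change()` helper are illustrative and not part of any PICMG tooling.

```python
# Pin counts of the main board-to-board interface, as quoted in this article.
PIN_COUNTS = {
    "COM Express Type 6": 440,
    "COM-HPC Mini": 400,
    "COM-HPC Size A": 800,
}


def percent_change(old: str, new: str) -> float:
    """Relative change in pin count from form factor `old` to `new`, in percent."""
    return (PIN_COUNTS[new] - PIN_COUNTS[old]) / PIN_COUNTS[old] * 100


if __name__ == "__main__":
    print(f"Type 6 to Mini:   {percent_change('COM Express Type 6', 'COM-HPC Mini'):+.1f}%")
    print(f"Type 6 to Size A: {percent_change('COM Express Type 6', 'COM-HPC Size A'):+.1f}%")
```

The first line shows that "10% fewer pins" is a rounded figure (about 9.1%); the second shows that moving from COM Express Type 6 to Size A adds roughly 82% more pins.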

Apart from the form factor and the 400-pin connector, are there any other differences between COM‑HPC Mini and the other COM-HPC form factors?

Yes, the pin assignment is different. In some cases, it includes multiple options so that as many configurations as possible can be implemented with the 400 pins, offering maximum flexibility on the smallest footprint. The voltage assignment of the sideband signals has also been adapted to reduce energy requirements and, even more importantly, to support low-power processors, which increasingly operate at 1.8 V. This simplifies both module and carrier board designs, because fewer level converters are needed than if the sideband specification of the larger COM-HPC form factors had been retained. Consequently, COM-HPC Mini modules cannot be fitted on a carrier board designed for Size A: the two form factors are incompatible, both electrically and in terms of interface assignment. The heatspreader is also flatter to enable lower-profile designs. Furthermore, MIPI-CSI support is not implemented via the 400-pin connector but via two additional 22-pin flat foil connectors, so in effect the COM-HPC Mini specification has 444 pins. This approach with additional connectors was adopted by analogy with SMARC and COM Express, where it has already proven itself. Compared with other mini form factors, another figure underlines why COM-HPC Mini marks the absolute high end: besides the higher pin count, support for up to 107 W leaves significantly more power reserve than the 15 W typically possible with SMARC.
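The 444-pin figure is simply the main connector plus the two MIPI-CSI connectors. A minimal Python sketch of that bookkeeping, with the power comparison added, could look as follows; the `Connector` class, `total_pins()` helper and constant names are hypothetical, and the values are only those stated in this answer.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Connector:
    """One connector type on the module and how many of it are fitted."""
    purpose: str
    pins: int
    count: int = 1


# COM-HPC Mini connector inventory, as described in this article.
MINI_CONNECTORS = (
    Connector("main board-to-board interface", pins=400),
    Connector("MIPI-CSI flat foil connector", pins=22, count=2),
)

MINI_MAX_POWER_W = 107      # upper limit quoted for COM-HPC Mini
SMARC_TYPICAL_POWER_W = 15  # typical SMARC figure quoted in the article


def total_pins(connectors: Iterable[Connector]) -> int:
    """Sum the contacts across all connectors."""
    return sum(c.pins * c.count for c in connectors)


if __name__ == "__main__":
    print(total_pins(MINI_CONNECTORS))  # 444
    print(f"{MINI_MAX_POWER_W / SMARC_TYPICAL_POWER_W:.1f}x power headroom vs. SMARC")  # 7.1x
```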

The new Mini form factor completes the COM-HPC specification, making it the most widely scalable COM standard on the market.

Could you explain multiplexing? Doesn’t it lead to arbitrary configurations, which can result in incompatibilities?

Compared to COM-HPC Client with its higher pin count, the Mini specification provides 8 high-speed data channels (SuperSpeed lanes) for the fast USB 3.x / USB4 data lines and for the digital display interfaces (DDIs). However, not all data channels can be used for any purpose. We have therefore predefined five options for dividing the 8 SuperSpeed lanes between DDI and USB assignments. Besides 2x DDI plus 4x USB3 on the one hand and 4x USB4 on the other, the variants 1x DDI, 1x USB4 and 4x USB3; 1x DDI, 2x USB4 and 2x USB3; and 3x USB4 and 2x USB2 are also possible. These are the only permitted assignments, which offers planning security. The same applies to the assignment of PCIe, Ethernet and SATA, which share SERDES lanes. Arm developers have been familiar with the SERDES principle introduced with COM-HPC for some time, and it has proved its worth. However, to understand the potential of each individual module, developers need to consider the significantly greater number of possible combinations. To a certain extent, SATA is also a concession to current legacy designs. However, this option is hardly used anymore, as the numerous PCIe interfaces also enable fast NVMe flash mass storage.
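The value of a closed list of options is that a carrier designer can check a planned interface mix against it. A minimal Python sketch of such a check follows. The option table mirrors the five assignments named above; `options_covering()` is a hypothetical helper that assumes a carrier may leave some interfaces of an option unused. It does not model the SERDES options for PCIe, Ethernet and SATA or any pin-level details of the specification.

```python
from collections import Counter
from typing import Dict, List

# The five predefined SuperSpeed lane assignments, as listed in this article.
PERMITTED_OPTIONS: List[Counter] = [
    Counter({"DDI": 2, "USB3": 4}),
    Counter({"USB4": 4}),
    Counter({"DDI": 1, "USB4": 1, "USB3": 4}),
    Counter({"DDI": 1, "USB4": 2, "USB3": 2}),
    Counter({"USB4": 3, "USB2": 2}),
]


def options_covering(requested: Dict[str, int]) -> List[int]:
    """Return the 1-based numbers of all permitted options that provide at least
    the requested number of each interface type (empty list: not supported)."""
    matches = []
    for number, option in enumerate(PERMITTED_OPTIONS, start=1):
        # Counter returns 0 for interface types an option does not offer.
        if all(option[kind] >= qty for kind, qty in requested.items()):
            matches.append(number)
    return matches


if __name__ == "__main__":
    print(options_covering({"DDI": 1, "USB4": 2}))  # [4]
    print(options_covering({"DDI": 2, "USB4": 1}))  # [] (not a permitted combination)
```

An empty result tells the designer early that the planned mix needs a different option or a different interface plan, rather than discovering the conflict at module selection.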

COM-HPC Mini has various multiplexed pin assignments, such as SERDES lanes shared by PCIe, GbE and SATA. The 8 SuperSpeed lanes can also be split between different DDI and USB interfaces.

Let’s look at the COM-HPC Server portfolio. What is emerging here?

When Intel Xeon D processors, codenamed Ice Lake D, were launched, it was interesting to observe that OEMs don’t seem to need the full memory bandwidth of these processors. This means they can rely on Size D, which offers only 4 instead of 8 RAM slots. The reason is that mixed-criticality edge server applications don’t have to handle RAM-intensive server workloads. Rather, they must host multiple real-time applications in parallel and therefore require as many cores as possible. They must also meet the requirements of industrial communication, with many small message packets that need to be processed in real time. Here too, memory capacity is not as crucial as it is for database-backed web servers used by thousands of people simultaneously.

When deploying COM-HPC Server modules, embedded OEMs currently still tend to use Size D with 4 RAM slots, because there is typically a need to process many small data packets in real time at the edge. However, solutions with 8 RAM slots are available for greater memory requirements.

Let’s move on to the new features that apply to all form factors in the specification. What are the most significant changes here?

There are two things to mention: First, the connector has been optimized to increase ruggedness even further. Second, COM-HPC is now fully qualified for PCIe Gen 6.

Does the new connector also affect the carrier board?

Yes, two small holes are required to increase ruggedness, and the metal reinforcements on the sides of the connector are soldered for greater mechanical stability. Other than that, nothing changes. The new reinforced connector can only be used if the second hole is also present. However, both the old and the new connector can be mounted using the same SMT soldering process, so only a minor change to the carrier board design is required to comply with Rev. 1.2. While I anticipate that the previous connector will remain available, it makes sense to avoid it in new carrier board designs. The changeover is unproblematic: the old and new connectors can be used in all combinations. Notably, the new connector will be available from at least three manufacturers. In addition to Samtec, Amphenol and All Best now also manufacture this new variant under license, which enables second-source strategies.

COM-HPC 1.2 specifies a new connector for all COM-HPC sizes. Its pin increases the stability of the connection between board and connector.

What changes had to be made for PCIe Gen 6 designs? Are there already processors that support this bandwidth, and who needs this performance?

In general, it is already possible to design carrier boards for Gen 6, even though there are no processors supporting Gen 6 yet. However, they will become available in the not too distant future. To integrate them, the carrier board must be prepared for PAM4 instead of PAM2 (NRZ) modulation. In addition to the values 0 and 1, PAM4 uses two intermediate levels, transmitting 2 bits instead of 1 per symbol. As this upgrade does not change the transmitted frequencies, hardly any new signal integrity challenges are anticipated. However, careful design is still important, as Gen 6 requires even greater attention to signal noise than Gen 5: with four levels in the same voltage swing, the spacing between adjacent levels is only a third of the spacing between the two NRZ levels. The higher bandwidth of 8 GB/s per lane and direction is required for graphics, for example. This enables up to 128 GB/s via 16 lanes, making it possible to transmit frames with ever higher resolution at higher frame rates and with greater color depth. 100 GbE is already standard in many areas, and this bandwidth must also be passed through to the processor. USB is also getting faster and faster.
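The modulation change and the bandwidth figures can be illustrated with a short Python sketch. It assumes a Gray-coded bit-to-level mapping with normalized levels and uses the PCIe Gen 5 and Gen 6 rates of 32 and 64 GT/s. The helper names are hypothetical, the throughput figures are raw per-direction values without FLIT or encoding overhead, and the code is an illustration rather than a signal-integrity model.

```python
from typing import List, Sequence

# Gray-coded PAM4 mapping: neighbouring levels differ in exactly one bit, so a
# slicer error to an adjacent level corrupts only one bit. Levels are
# normalised to -3, -1, +1, +3 for illustration (assumption).
PAM4_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}


def nrz_encode(bits: Sequence[int]) -> List[int]:
    """PAM2/NRZ: one bit per symbol, two levels spanning the same swing."""
    return [3 if b else -3 for b in bits]


def pam4_encode(bits: Sequence[int]) -> List[int]:
    """PAM4: two bits per symbol, four levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 encoding needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def symbol_rate_gbaud(gt_per_s: float, bits_per_symbol: int) -> float:
    """Symbols per second on the wire; this sets the signal frequencies."""
    return gt_per_s / bits_per_symbol


def raw_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """Raw throughput per direction, ignoring FLIT/encoding overhead."""
    return gt_per_s * lanes / 8


if __name__ == "__main__":
    bits = [1, 0, 0, 1, 1, 1, 0, 0]
    print(nrz_encode(bits))          # 8 symbols
    print(pam4_encode(bits))         # [3, -1, 1, -3]: 4 symbols for the same 8 bits
    print(symbol_rate_gbaud(32, 1))  # PCIe Gen 5, NRZ: 32.0
    print(symbol_rate_gbaud(64, 2))  # PCIe Gen 6, PAM4: 32.0
    print(raw_gbytes_per_s(64, 1), raw_gbytes_per_s(64, 16))  # 8.0 128.0
```

Both generations run at the same symbol rate, which is why Gen 6 does not raise the signal frequencies; the extra bit per symbol is paid for with a third of the level spacing (2 instead of 6 in these normalized units), which is where the additional noise sensitivity comes from.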

Are rugged embedded designs with such performance increases even possible?

Fortunately, there are positive developments in manufacturing processes towards 7 nm and smaller, as well as in 3D technology. These make it possible to double performance within the same TDP, and new processors with exactly this capability will be launched soon. We can therefore increase performance further while staying within the limits of passive cooling. This is also the only way to develop embedded systems that meet increasingly high sustainability requirements, including climate neutrality.