Sub-threshold circuitry: Making Moore's about power, not performance

August 12, 2016

As silicon geometries approach the edge of physics, a new rule of thumb is poised to govern the computing industry: “Thou shalt reduce power consumption by 50 percent every two years.” How could that be possible? Sub-threshold voltage circuitry.

The ULPBench is a standardized benchmark developed by the Embedded Microprocessor Benchmark Consortium (EEMBC) for measuring the energy efficiency of ultra-low power (ULP) embedded microcontrollers (MCUs). The benchmark ports a normalized set of MCU workloads, such as memory and math operations, sorting, and GPIO interaction, to a target. These workloads form the basis for analyzing the active and low-power conditions of 8-, 16-, or 32-bit MCUs, including active current, sleep current, core efficiency, cache efficiency, and wake-up time. The results are then calculated using a reciprocal formula (1000/Median of 5 times average energy per second for 10 ULPBench cycles), yielding a score based on the amount of energy consumed during workload operation – the ULPBench score.
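As a rough illustration of the reciprocal formula, the sketch below reads "Median of 5 times" as the median of five measurement runs, each run being the average energy per second over 10 ULPBench cycles. That reading, the microjoule unit, the function name `ulpbench_score`, and the sample values are all assumptions made for illustration; they are not EEMBC reference code or measured data.

```python
from statistics import median


def ulpbench_score(energy_per_second_uj):
    """Return 1000 divided by the median of five per-run energy averages.

    Each entry is assumed to be the average energy per second (in
    microjoules) measured over 10 ULPBench cycles in one run.
    """
    if len(energy_per_second_uj) != 5:
        raise ValueError("expected exactly five measurement runs")
    return 1000.0 / median(energy_per_second_uj)


# Hypothetical measurements, not taken from any real device.
baseline_runs = [10.2, 9.8, 10.0, 10.1, 9.9]
improved_runs = [5.0, 5.0, 5.0, 5.0, 5.0]

print(ulpbench_score(baseline_runs))  # median is 10.0, so the score is 100.0
print(ulpbench_score(improved_runs))  # half the energy, so the score is 200.0
```

Because the score is a reciprocal, halving the energy per second doubles the score, which is why a higher number is better.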

In November 2015, Ambiq Micro (www.ambiqmicro.com), a semiconductor vendor out of Austin, TX, submitted its Apollo MCU for testing against the ULPBench, scoring 377.50 (the reciprocal formula means the higher the benchmark score, the better), more than twice that of the previous bellwether, STMicroelectronics’ STM32L476RG. Depending on the direction of an application, this 2x power savings can be repurposed to extend battery life or add new features (Sidebar 1). According to Scott Hanson, Ph.D., Founder and CTO of Ambiq Micro, advances in energy efficiency such as those being realized today could lead to a new iteration of Moore’s law in which the power consumption of embedded microprocessors is cut in half every couple of years.

“What we’re seeing is every one of our customers wants to one-up their product from last year,” Hanson says. “If they want to add some great new feature – maybe last year it was heart rate monitoring and this year a microphone to do some always-on voice processing – that all takes CPU cycles. Today a lot of these companies are only running effectively at 1 million instructions per second (MIPS) or so, so very few cycles per second. Maybe they want to make a leap to 10 MIPS and, suddenly, MCU power goes from being a 25 percent contributor to a 75 percent contributor. That’s a problem.

“Much in the same way that Moore’s law was about adding more transistors in the same area, we have to be very focused on driving energy down 2x or 4x every single year,” he adds.
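Hanson's 1 MIPS to 10 MIPS example can be checked with simple arithmetic. The sketch below assumes that MCU power scales linearly with instruction rate and that the rest of the system's power stays constant; both are simplifications, and the function name `share_after_scaling` is hypothetical.

```python
def share_after_scaling(initial_share, speedup):
    """Return the MCU's share of system power after scaling its workload.

    Total system power is normalized to 1.0 at the starting point.
    Assumes MCU power grows linearly with the speedup and that all
    other components draw the same power as before.
    """
    mcu = initial_share * speedup
    rest = 1.0 - initial_share
    return mcu / (mcu + rest)


# MCU starts as a 25 percent contributor and its workload grows 10x.
print(f"{share_after_scaling(0.25, 10):.0%}")  # prints 77%
```

Under these assumptions the MCU's share rises from 25 percent to roughly 77 percent, in line with the "75 percent contributor" figure in the quote.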

Sidebar 1 | Wearable energy efficiency beyond the battery

It’s true: charging a Fitbit couldn’t be simpler. Still, for a wearable device, especially one that requires 24-hour wear to take full advantage of its capabilities, charging even once a week feels like a chore. When the battery drains, it’s like your carriage turned back into a pumpkin. What was once the leading authority on your personal fitness level is now just an ugly, slightly uncomfortable bracelet. It’s so disenchanting that every time you put it on the charger, it might not make it back onto your wrist.

This is exactly the problem that Misfit wanted to remedy. Their goal? Produce a device that never needs to be removed. The result? Their original tracker, the Misfit Shine, boasted a six-month battery life; most activity trackers need to be charged at least once a week.

But while the Shine outpaced most other trackers in battery life, many users found the functionality to be too limited. The Shine was equipped with a Silicon Labs EFM32 MCU, Bluetooth Low Energy (BLE), and a 3-axis accelerometer. That put the Shine about on par with Fitbit’s most basic offering, the Fitbit Zip, which, while not intended to track sleep, offers similar battery life and a more useful display. The next-generation Shine would need to add functionality without backpedaling on their commitment to long-term wear.

Enter Ambiq Micro’s Apollo MCU. The Apollo in the Misfit Shine 2, twice as powerful as the EFM32 MCU in the original Shine, allowed for the addition of a vibration motor for call and text notifications; multicolored LEDs and a capacitive touch sensor for a clearer, more interactive user interface; and a magnetometer to improve the accuracy of activity tracking. Thanks to Ambiq’s SPOT platform, the Apollo also boasts industry-leading energy efficiency, mitigating tradeoffs in power consumption brought on by the added functionality to retain the six-month battery life of the Shine 2’s predecessor.

[Figure 1 | The Misfit Shine 2 has a six-month battery life. Similar activity trackers, like Fitbit’s newest and most advanced “everyday” offering the Fitbit Alta, require a charge about every five days.]

But while the Apollo offers unparalleled power consumption compared to similar MCUs, the processor isn’t the only place where battery life can be extended. Other components, such as sensors and wireless chips, could also leverage sub-threshold circuitry such as that used on the Apollo MCU to reduce power, and software can be optimized to further increase energy efficiency.

The way Ambiq’s Chief Technology Officer Scott Hanson sees it, “We’re constantly going to be talking about how we need to be lower energy and the batteries need to be better. Every component needs to be more efficient than it is today. We’re always going to be under that pressure.”

Sub-threshold voltage circuitry demystified

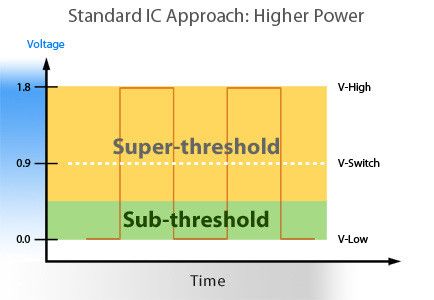

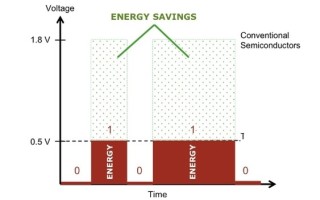

What enables the Apollo MCU to achieve such notable ULP performance metrics is the use of sub-threshold circuitry, which operates transistors on supply voltages below their threshold voltage, well under the 1.8V or 3.3V supplies of typical MCUs. Threshold voltage represents the minimum gate-to-source voltage required to change a transistor’s state from “off” to “on” or drive a signal “low” or “high” for logic purposes. In a standard 1.8V integrated circuit (IC), significant current can be required to perform these state changes, which directly correlates with power consumption because dynamic energy – the energy associated with turning transistors on or off – scales with the square of the operating voltage (Figures 1 and 2).

[Figure 1 | A typical 1.8V IC requires a significant amount of current to achieve a state change.]

[Figure 2 | Dynamic power consumption, or the energy required to switch a transistor on or off, is responsible for the majority of the energy used by ICs, particularly at higher operating voltages.]
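To put the square-law relationship in numbers, the sketch below compares dynamic switching energy at 0.5V against 1.8V. It assumes the switched capacitance is the same at both voltages, so it cancels out of the ratio; the function name `dynamic_energy_ratio` is hypothetical, and real designs will differ once leakage and slower switching are accounted for.

```python
def dynamic_energy_ratio(v_new, v_old):
    """Return the ratio of dynamic switching energy at v_new versus v_old.

    Dynamic energy per switching event is proportional to C * V**2.
    With the capacitance C assumed unchanged, only the voltages matter.
    """
    return (v_new / v_old) ** 2


ratio = dynamic_energy_ratio(0.5, 1.8)
print(f"energy ratio: {ratio:.3f}")        # about 0.077
print(f"reduction:    {1 / ratio:.1f}x")   # about 13.0x
```

In this simplified view, dropping the supply from 1.8V to 0.5V cuts dynamic energy per switching event by roughly a factor of 13.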

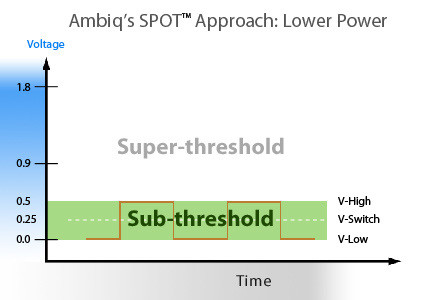

Ambiq, however, uses its Sub-threshold Power Optimized Technology, or SPOT, to operate transistors at voltages of less than 0.5V (sub-threshold), which provides a couple of benefits (Figure 3). First, state switching at these lower operating voltages makes for lower dynamic energy consumption; second, the leakage current (see Figure 2) of “off” transistors can be harnessed to perform most computations, in essence recapturing previously lost power. In the case of Ambiq’s 32-bit ARM Cortex-M4F-based Apollo MCUs running at up to 24 MHz, the result is a platform that consumes 34 µA/MHz executing from flash and draws sleep currents as low as 140 nA, both of which are lower than competitive Cortex-M0+ offerings, the company says.

[Figure 3 | Ambiq’s SPOT platform operates transistors at sub-threshold voltages of less than 0.5V to achieve significant energy savings compared to standard IC implementations.]
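The quoted Apollo figures can be turned into a back-of-the-envelope current estimate. The sketch below assumes the 34 µA/MHz figure scales linearly up to 24 MHz and uses a hypothetical 1 percent active duty cycle; neither assumption comes from Ambiq, and the function names are illustrative only.

```python
def active_current_ua(ua_per_mhz, clock_mhz):
    """Return active current in microamps, assuming linear scaling with clock."""
    return ua_per_mhz * clock_mhz


def average_current_ua(active_ua, sleep_ua, active_fraction):
    """Return the time-weighted average current for a duty-cycled MCU."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_ua + (1.0 - active_fraction) * sleep_ua


active = active_current_ua(34.0, 24.0)   # 34 uA/MHz at 24 MHz
sleep = 0.14                             # 140 nA expressed in microamps
average = average_current_ua(active, sleep, 0.01)  # hypothetical 1% duty cycle

print(f"active:  {active:.0f} uA")    # 816 uA
print(f"average: {average:.2f} uA")   # about 8.30 uA
```

At low duty cycles the active term still dominates the average, which is why both the µA/MHz figure and the sleep current matter for battery life.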

“What we effectively do is we take a normal microprocessor, including both the analog elements and the digital elements, and run them at much lower voltage,” Hanson explains. “On the digital side we dial down the voltage very low, anywhere between 200 mV and 600 mV, depending on the type of device you’re using. That requires a system-wide change in how you design the chip, from the standard cells to how you do simulations to how you do timing closure to how you do voltage regulation – all of that has to be modified specifically to run at lower voltage. And then on the analog side we run at extremely low gate-to-source voltages, so we’ll use tail currents in amplifiers that are as low as a few picoamps.”

Sub-threshold circuitry is not without its challenges, however. While it can deliver exponential gains in energy efficiency, such low-voltage operation precludes processor speeds above a couple hundred MHz (for now) and also makes for circuits that are inherently more sensitive to fluctuations in temperature and voltage (Figure 4).

“This obviously comes with its share of problems,” Hanson says. “We’re very sensitive to temperature fluctuations and voltage fluctuations and process fluctuations, but we have a pretty wide range of techniques that we use to address that, for instance with proprietary analog circuit building blocks. Every analog circuit that you can read about in a textbook is based on saturated transistors and bipolar transistors, not sub-threshold-based MOSFETs, so we have had to reinvent a lot of the underlying analog circuit building blocks such that they work at extremely low sub-threshold currents.

[Figure 4 | Besides exponential current fluctuations in response to changes in operating voltages at sub-threshold levels, slight temperature variations can lead to radical current deltas as well. This mandates significant compensation in sub-threshold circuitry.]

“Internally, we’re doing a lot of voltage conversion to get voltage down, we’re managing all the process variations, voltage variations, and temperature variations, and the result is dramatically lower energy,” Hanson continues. “There’s not any one silver bullet. There’s a wide range of things that we do to make sub-threshold possible.”
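The sensitivity Hanson describes follows from the textbook first-order model of weak-inversion (sub-threshold) current, in which drain current depends exponentially on gate voltage and on the thermal voltage kT/q. The sketch below uses that generic model, not Ambiq's design data: the slope factor `n`, the prefactor `i0`, and the bias points are illustrative assumptions, the drain-to-source voltage is assumed to be much larger than kT/q, and the temperature dependence of the threshold voltage and of `i0` is ignored.

```python
import math

K_BOLTZMANN = 1.380649e-23    # Boltzmann constant, J/K
Q_ELECTRON = 1.602176634e-19  # elementary charge, C


def thermal_voltage(temp_k):
    """Return the thermal voltage kT/q in volts."""
    return K_BOLTZMANN * temp_k / Q_ELECTRON


def subthreshold_current(vgs, vth, temp_k, n=1.5, i0=1e-7):
    """First-order weak-inversion drain current in amps.

    n and i0 are illustrative values, not parameters of any real process.
    """
    return i0 * math.exp((vgs - vth) / (n * thermal_voltage(temp_k)))


def current_ratio_for_gate_step(delta_v, temp_k, n=1.5):
    """Return the factor by which current changes for a gate-voltage step."""
    return math.exp(delta_v / (n * thermal_voltage(temp_k)))


# A 50 mV supply or gate fluctuation at 300 K.
print(current_ratio_for_gate_step(0.05, 300.0))

# Same bias point (Vgs = 0.3 V, Vth = 0.5 V) at 300 K and at 350 K.
cold = subthreshold_current(0.3, 0.5, 300.0)
hot = subthreshold_current(0.3, 0.5, 350.0)
print(hot / cold)
```

With these assumed values, a 50 mV shift changes the current by roughly 3.6x, and a 50 K rise roughly doubles it even before threshold-voltage drift is included, which illustrates why sub-threshold designs need the compensation described above.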

More than Moore’s

Advances in sub-threshold circuitry and voltage optimization will become more prevalent as Moore’s law continues to eke out smaller process nodes and the source-to-drain channels of MOSFETs shrink in size, necessitating lower and lower supply voltages as well as increasingly smaller thresholds. As Moore’s law advances towards the limits of physics, power consumption will become as central a tenet of computing as performance, if not replace it.

“One of the challenges we see for Ambiq at a system level is that, as we’ve driven energy efficiency of the processor down, the overall contribution of the microprocessor to the system has gone down to the point where customers say, ‘Hey, you knock another 10x out of the energy, it doesn’t make a difference because you’re already very, very low,’” says Hanson. “What they really need is for us to knock that energy down by 10x, but they need a commensurate increase in performance to take advantage of that. That is to say, if I reduce energy by 10x, they want to see an accompanying 10x increase in performance so they can stay in the same power envelope but dramatically increase the processing power. We focus a lot on that as a company: How can I both increase performance and continue to reduce energy?

“We saw Moore’s law in the high-performance computing industry with PCs, notebooks, and phones. We saw Moore’s law lead the way in allowing us to deliver something better and more incredible every year so we could add more features, but we were kind of stuck in the same form factors, the same battery life, etc.,” Hanson continues. “We’re going to see the same thing happen with power consumption, and that means that we’re constantly going to be talking about how we need lower energy in every component.”