A source-annotation-based framework for structural coverage analysis tool testing

January 19, 2017


Automated testing of software tools always requires some way of comparing what the tool does against what we expect it to do. Testing compilers, for example, usually entails verifying the behavior of compiled programs, checking compilation error messages, or analyzing the generated machine code. For static or dynamic analysis tools, this typically involves checking the tool outputs for well-defined sets of inputs.

The following presents a framework developed for in-house testing of a structural coverage analysis tool, where expected coverage results are expressed as annotations in source comments. This framework was used to qualify the tool for use in several safety-critical software projects with stringent certification constraints in the avionics domain.

We first summarize the coverage criteria and tool output formats that need to be supported, then introduce the main principles of our scheme for describing the tool’s expected results and explain the advantages over performing comparisons against baseline outputs.

Coverage criteria and output report formats

The code coverage analysis tool we need to test supports the three coverage criteria defined by the DO-178C certification standard for airborne software [1]: Statement Coverage, Decision Coverage, and Modified Condition/Decision Coverage, commonly referred to as MC/DC [2]. It produces two kinds of output reports:

- Annotated sources, generated from the analyzed sources, with each line prefixed by a line number and a coverage result indication;

- A text report, listing violations against the coverage criteria for which an assessment was required.

Figure 1 shows an excerpt of an annotated source result for a Decision Coverage assessment performed by the tool on an Ada function that was called only once, with a value of X greater than the Max parameter.

[Figure 1 | Shown here is an example annotated source result for a Decision Coverage assessment performed by the structural coverage analysis tool on an Ada function.]


The information at the start of each line is the coverage result output by the tool. The “--#” texts are special comments within the original source (comments start with “--” in Ada) that the framework recognizes as introducing tags. These tags let test writers designate lines when stating expected coverage results, as described later.
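To make the annotated-source format concrete, the following minimal Python sketch shows how a test engine might extract emitted line notes from such a report. It assumes each line looks like “<number> <note>: <source text>” and that only “+”, “!” and “-” are of interest; the sample uses a hypothetical reconstruction of In_Range consistent with the description in this article, not the actual content of Figure 1.

```python
import re
from typing import Dict

# Assumed layout of an annotated-source line: "<line number> <note>: <source text>".
# Lines whose indication is not "+", "!" or "-" (e.g. lines without code) are skipped.
ANNOTATED_LINE = re.compile(r"^\s*(\d+)\s+([-+!])\s*:")

def parse_line_notes(report: str) -> Dict[int, str]:
    """Map each annotated line number to its coverage indication character."""
    notes = {}
    for text in report.splitlines():
        match = ANNOTATED_LINE.match(text)
        if match:
            notes[int(match.group(1))] = match.group(2)
    return notes

# Hypothetical annotated output for In_Range called once with X > Max.
SAMPLE = """\
   1 .: function In_Range (X, Min, Max : Integer) return Boolean is
   2 .: begin
   3 !:    if X >= Min and then X <= Max then  --# expr_eval
   4 -:       return True;                     --# expr_true
   5 .:    else
   6 +:       return False;                    --# expr_false
   7 .:    end if;
   8 .: end In_Range;
"""

print(parse_line_notes(SAMPLE))
```

With this sample, lines 3, 4 and 6 carry the “!”, “-” and “+” indications discussed below.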

In DO-178C parlance, Boolean expressions such as the one controlling the if-statement are called decisions. In addition to executing each source statement, achieving Decision Coverage requires tests that evaluate each decision to True at least once and to False at least once.

In the example at hand, the decision controlling the if-statement was evaluated only once, to False, since X > Max. The decision is thus only partially covered, and the return statement on line 4 is never executed. This is conveyed by the “!” and “-” characters next to the line numbers on lines 3 and 4, while the “+” annotation on line 6 indicates full coverage of the return statement there.
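The reasoning behind these indications can be sketched as a small function mapping the outcomes observed for one decision to a line note and a set of violation codes. This is a simplification under stated assumptions: it reuses the short identifiers introduced later in this article (“dT-”, “dF-”, “s-”) and treats a never-evaluated decision as a statement never executed; the real tool logic is not described here.

```python
from typing import FrozenSet, Set, Tuple

def decision_coverage(outcomes: Set[bool]) -> Tuple[str, FrozenSet[str]]:
    """Return (line note, violation codes) for one decision under Decision Coverage.

    outcomes: the Boolean values the decision was observed to take.
    """
    if not outcomes:
        # Decision never evaluated: its enclosing statement never executed.
        return "-", frozenset({"s-"})
    missing = {True, False} - outcomes
    if not missing:
        # Both outcomes exercised: the decision is fully covered.
        return "+", frozenset()
    # Exactly one outcome exercised: partial coverage, flag the other one.
    code = "dT-" if True in missing else "dF-"
    return "!", frozenset({code})

# The In_Range scenario: a single evaluation, to False.
print(decision_coverage({False}))
```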

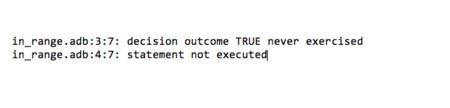

The other output format for this assessment, a text report listing coverage violations with respect to the criteria, would include messages such as those in Figure 2, where each message starts with a filename:line-number:column-number source location consistent with the annotated source result.

[Figure 2 | Messages generated in a text report of the Decision Coverage assessment performed by the structural coverage analysis tool on an Ada function are shown here.]
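A test engine needs to turn such messages into emitted violation notes. The Python sketch below assumes messages of the form “file:line:column: text” and a hypothetical table mapping message texts to short identifiers; the exact wording of the tool’s messages is an assumption, and unknown messages are kept with a “?” code so that they surface as unexpected rather than being silently dropped.

```python
import re
from typing import List, NamedTuple

class Violation(NamedTuple):
    filename: str
    line: int
    column: int
    code: str  # short identifier such as "s-", "dT-", "dF-"; "?" if unrecognized

# Hypothetical message texts (compared in lowercase) and their short identifiers.
MESSAGE_CODES = {
    "statement never executed": "s-",
    "decision outcome true never exercised": "dT-",
    "decision outcome false never exercised": "dF-",
}

LOCATION = re.compile(r"^(?P<file>[^:\s]+):(?P<line>\d+):(?P<col>\d+):\s*(?P<msg>.*)$")

def parse_violations(report: str) -> List[Violation]:
    """Extract located violation messages from a text report."""
    result = []
    for text in report.splitlines():
        m = LOCATION.match(text.strip())
        if not m:
            continue  # headers, blank lines, summaries
        code = MESSAGE_CODES.get(m.group("msg").strip().lower(), "?")
        result.append(Violation(m.group("file"), int(m.group("line")), int(m.group("col")), code))
    return result

SAMPLE = """\
in_range.adb:3:7: decision outcome TRUE never exercised
in_range.adb:4:7: statement never executed
"""
print(parse_violations(SAMPLE))
```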

Stating basic expected coverage results

The previous section illustrated what the tool’s actual coverage results look like. We now describe how test writers specify the expected results for a given test scenario.

The main goal of the framework is to let testers state expectations efficiently in terms of the source code being tested, while abstracting away the details of the report formats. It also encourages active thinking about what the expected results for a test should be.

The framework defines a test as a combination of three categories of source files:

- Functional sources, which contain the code whose coverage the tester wants to assess, checking that the results conform to the coverage tool requirements;

- Driver sources, which call into the functional code in a specific manner with precise coverage objectives;

- and helper sources, which are simply needed for completeness and do not require coverage analysis.

Expected coverage results are then stated as specially formatted comments in the driver sources, referring to lines in the functional sources also tagged by specially formatted comments.

The In_Range example function presented previously shows instances of special comments introducing tags. The “expr_eval” tag, for example, allows denoting the line where the decision expression gets evaluated. A given tag may appear on several lines.

The special comments describing expected coverage results in driver sources are sequences of comments as shown in Figure 3, where “xp” stands for “expected”. The first line marks the start of expected results for the functional source named functional-source-filename. The /tag1/ line states expectations for all the source lines tagged with tag1 in this source. xp-source-line-note conveys the coverage indication character expected in the annotated source output format for these lines (same annotation for all the lines), and xp-violation-notes conveys the set of violation messages expected in the text report format for these lines (same set for all the lines).

[Figure 3 | The special comments describing expected coverage results in driver sources for the structural coverage analysis assessment performed on the example Ada function are shown here.]


A driver source may contain several /tag/ lines for a given functional source and expectations for several functional sources.

On /tag/ lines, short identifiers denote the various possible coverage indications compactly. For xp-source-line-note, for example, “l+”, “l-”, and “l!” denote an expected “+”, “-”, or “!” coverage indication on the annotated source lines, respectively. Among the identifiers available for xp-violation-notes, “s-” denotes an expected “statement never executed” violation, and “dF-” a “decision outcome False never exercised” violation.

Figure 4 shows a sketch of a driver source illustrating a Decision Coverage test over the source file that provides the In_Range function, named in_range.adb. This driver implements the execution scenario previously used to illustrate the output report formats, calling the In_Range function once with X > Max:

[Figure 4 | This sketch of driver source illustrates a Decision Coverage test over the source file providing the In_Range function (in_range.adb), where the function is called once using X > Max as in the previous assessment example.]


The /expr_eval/ line states expected coverage results for the set of lines tagged “expr_eval” within in_range.adb. In the example, this is the single line where the decision gets evaluated (only once, to False, by this specific driver), so a partial coverage indication on the annotated line (l!) and a “decision outcome True never exercised” violation in the text report (dT-) are expected.

The /expr_true/ and /expr_false/ lines state expected coverage results for the source lines tagged “expr_true” and “expr_false”, tags chosen to denote the lines with statements executed when the decision evaluates to True or to False, respectively. The “0” used as xp-violation-notes for “expr_false” denotes an empty set, meaning that no violation is expected for these lines in the text report. This is consistent with the expected “+” in the annotated source format (l+ as the xp-source-line-note), which corresponds to full coverage of all the items on the lines (in the example, a single return statement on a single line), as enforced by the execution scenario.

These expectations correspond exactly to the actual results shown in the initial example; this test would “pass” using the structural coverage analysis tool testing framework.
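The expectation comments themselves are easy to process mechanically. The Python sketch below parses a driver into per-source expectation lists. Since Figures 3 and 4 are not reproduced here, it assumes a header comment “--# <functional-source-filename>” followed by lines “--  /<tag>/ <xp-source-line-note> ## <xp-violation-notes>”; the “##” separator and the Ada driver body (including the Assert procedure) are hypothetical.

```python
import re
from typing import Dict, List, Optional, Tuple

# Assumed comment syntax for expectations in driver sources.
SOURCE_HEADER = re.compile(r"^\s*--#\s*(\S+)\s*$")
TAG_LINE = re.compile(r"^\s*--\s*/(?P<tag>[^/]+)/\s*(?P<lnote>\S+)\s*##\s*(?P<vnotes>.*?)\s*$")

Expectation = Tuple[str, str, List[str]]  # (tag, line note, violation notes)

def parse_expectations(driver: str) -> Dict[str, List[Expectation]]:
    """Group /tag/ expectation lines under the functional source they refer to."""
    result: Dict[str, List[Expectation]] = {}
    current: Optional[str] = None
    for text in driver.splitlines():
        header = SOURCE_HEADER.match(text)
        if header:
            current = header.group(1)
            result.setdefault(current, [])
            continue
        tag_line = TAG_LINE.match(text)
        if tag_line and current is not None:
            vnotes = tag_line.group("vnotes").split()
            if vnotes == ["0"]:
                vnotes = []  # "0" denotes an empty set of expected violations
            result[current].append((tag_line.group("tag"), tag_line.group("lnote"), vnotes))
    return result

# Hypothetical driver: In_Range called once with X > Max.
DRIVER = """\
with Assert, In_Range;
procedure Test_In_Range_Above is
begin
   Assert (not In_Range (X => 5, Min => 1, Max => 3));
end Test_In_Range_Above;

--# in_range.adb
--  /expr_eval/   l! ## dT-
--  /expr_true/   l- ## s-
--  /expr_false/  l+ ## 0
"""
print(parse_expectations(DRIVER))
```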

Advanced expectations

The previous section showed basic formulations of expected tool behavior: unconditional, and referring to entire lines. Building a complete test suite for the targeted set of criteria, however, required the development of a number of more advanced capabilities.

The most immediate need was for precise source locations in xp-violation-notes, to allow referencing a particular section of a line when distinct diagnostics could legitimately be expected for different items on this line.

When assessing MC/DC, for example, the tool diagnostics refer to specific operands within a Boolean expression (conditions within decisions in DO-178C terms) and most coding standards allow Boolean expressions with multiple operands on the same line. Testers must be able to specify the particular condition on a line for which a coverage diagnostic is expected. Similar needs occur with other criteria as well, for example, with Statement Coverage when multiple statements share the same source line, or with Decision Coverage when nested decisions are involved in an expression.

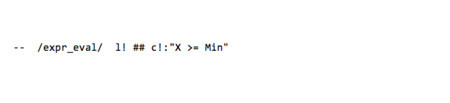

This is supported through extensions of the form :“line-excerpt” at the end of violation designators, as in the sample expectation line in Figure 5 for a hypothetical test of an MC/DC assessment over the example In_Range function. There, c!: “X >= Min” states that we expect an incomplete condition coverage diagnostic (c!) designating the “X >= Min” section of the line, which is just the first operand of the decision.

[Figure 5 | The sample expectation line here depicts a hypothetical test over the In_Range function example used previously, where c!: “X >= Min” states the expectation of an incomplete condition coverage diagnostic over the “X >= Min” section of the line.]

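Resolving a line excerpt amounts to locating the quoted text on the tagged line and using its column to select the right diagnostic. The Python sketch below illustrates this under assumptions: designators are written with straight quotes, optional whitespace may follow the colon, and the first occurrence of the excerpt on the line is used. The sample line is a hypothetical reconstruction of the In_Range decision line.

```python
import re
from typing import Optional, Tuple

# Assumed designator form: a code, optionally followed by :"<line-excerpt>".
DESIGNATOR = re.compile(r'^(?P<code>[^:"\s]+)(?::\s*"(?P<excerpt>[^"]*)")?$')

def resolve(designator: str, source_line: str) -> Tuple[str, Optional[int]]:
    """Return (violation code, 1-based column of the excerpt, or None for the whole line)."""
    m = DESIGNATOR.match(designator)
    if m is None:
        raise ValueError(f"malformed designator: {designator!r}")
    excerpt = m.group("excerpt")
    if excerpt is None:
        return m.group("code"), None
    index = source_line.find(excerpt)  # first occurrence on the line
    if index < 0:
        raise ValueError(f"excerpt {excerpt!r} not found on line")
    return m.group("code"), index + 1

LINE = "   if X >= Min and then X <= Max then  --# expr_eval"
print(resolve('c!: "X >= Min"', LINE))  # first operand of the decision
print(resolve('c!:"X <= Max"', LINE))   # second operand
```

An emitted condition diagnostic at another column of the same line would then not satisfy the expectation.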

A few other facilities were introduced to support, for example, cases where a single statement spans multiple lines, where expectations vary for different versions of the tool or the compilation toolchain, or the use of a common driver for the assessment of several coverage criteria. The exact details are beyond the scope of this article.

Execution model overview

The general execution model underlying the tool testing framework consists of reasoning about sets of coverage result indications, referred to as coverage notes. Four kinds of coverage note objects are handled by combining two independent aspects: the note origin and the kind of output format a note applies to.

Regarding the note origin, the following are distinguished:

- Expected notes, coming from a statement of expected result, and

- emitted notes, found in reports produced by the tool.

Regarding the kind of output format, the following are defined:

- Line notes, for coverage indication characters in an annotated source, and

- violation notes, for violation messages found in a coverage text report.

The xp-source-line-note in a /tag/ line spec is then internally modeled as an expected line note object. The xp-violation-notes are modeled as expected violation note objects, and emitted line or emitted violation note objects are extracted from the coverage reports produced by the tool.
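This note model can be sketched with two independent enumerations combined in one record. In the minimal Python sketch below, which is an illustration rather than the framework’s actual code, locations are line-level only and the codes reuse the article’s short identifiers; the hypothetical “instantiate” helper expands one /tag/ specification into expected notes for every line carrying the tag.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Tuple

class Origin(Enum):
    EXPECTED = "expected"  # stated in a /tag/ line of a driver source
    EMITTED = "emitted"    # extracted from a report produced by the tool

class Kind(Enum):
    LINE = "line"            # coverage indication character in an annotated source
    VIOLATION = "violation"  # violation message in a text report

@dataclass(frozen=True)
class Note:
    origin: Origin
    kind: Kind
    source: str  # functional source file name, e.g. "in_range.adb"
    line: int    # 1-based line number in that source
    code: str    # e.g. "l!" for a line note, "dT-" for a violation note

    def key(self) -> Tuple[Kind, str, int, str]:
        """All fields but the origin: expected and emitted notes match on equal keys."""
        return (self.kind, self.source, self.line, self.code)

def instantiate(source: str, lines: Iterable[int], line_note: str,
                violations: Iterable[str]) -> List[Note]:
    """Expand one /tag/ spec into expected notes for each tagged line."""
    violations = list(violations)
    notes = []
    for line in sorted(lines):
        notes.append(Note(Origin.EXPECTED, Kind.LINE, source, line, line_note))
        notes.extend(Note(Origin.EXPECTED, Kind.VIOLATION, source, line, v) for v in violations)
    return notes

print(instantiate("in_range.adb", [3], "l!", ["dT-"]))
```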

Essentially, the test suite engine performs the following steps for each test:

- Parse the test sources to construct sets of expected line and violation notes, one set of each per functional source. The engine matches /tag/ specifications in driver sources with tagged lines in the functional sources and instantiates individual note objects with a specific kind and source location information.

- Build the executable from the sources, execute it, and run the coverage analysis tool for the desired criterion, producing coverage reports.

- Parse the reports to construct sets of emitted line and violation notes.

- Match the expected line and violation notes against the emitted ones and report differences. A test passes when the tool has emitted every expected coverage indication for the assessed criterion and every coverage violation reported by the tool is matched by an expectation.
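The final matching step can be sketched as multiset differences. In this minimal Python sketch, under the assumption that both sides have already been normalized to the same flattened (kind, source, line, code) tuples, “missing” collects expected notes that were not emitted, and “unexpected” collects emitted violations without an expectation. Emitted line notes on lines nobody stated expectations for are deliberately not reported, matching the pass criterion above.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical flattened note: (kind, source, line, code), kind being "line" or "violation".
Note = Tuple[str, str, int, str]

def match_notes(expected: Iterable[Note],
                emitted: Iterable[Note]) -> Tuple[List[Note], List[Note]]:
    """Return (missing, unexpected); the test passes when both lists are empty."""
    exp = Counter(expected)
    emi = Counter(emitted)
    missing = sorted((exp - emi).elements())
    unexpected = sorted(n for n in (emi - exp).elements() if n[0] == "violation")
    return missing, unexpected

EXPECTED = [
    ("line", "in_range.adb", 3, "l!"), ("violation", "in_range.adb", 3, "dT-"),
    ("line", "in_range.adb", 4, "l-"), ("violation", "in_range.adb", 4, "s-"),
    ("line", "in_range.adb", 6, "l+"),
]
print(match_notes(EXPECTED, EXPECTED))
```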

Main characteristics of the scheme

An important characteristic of the scheme is that the coverage result expectations for a test are placed literally within the sources that drive how the functional code is exercised, and hence which pieces are covered and to what degree. This makes it convenient to verify consistency between what the test code does and what the corresponding expected coverage results are, and provides a straightforward mechanism to document the connection between the two through source comments.

Another key aspect is the specialized syntax developed to describe expectations, which encourages test writers to actively think about the expected results. This departs from methods based on comparisons with baseline outputs, where baselines are typically obtained by generating outputs with the tool and then verifying that those outputs are correct. Within this framework, there is no way to generate the specifications of expected results from tool outputs.

Similar ideas are used in some test suites based on the DejaGnu framework (www.gnu.org/software/dejagnu), such as the one used by the GCC project (gcc.gnu.org).

The approach is also interesting for long-term maintenance of test suites. First, any change in the report formats is handled by adjusting the test suite execution engine, which is a very localized and well-controlled change. This differs from baseline-oriented frameworks, where report format changes usually require adjusting the full test baseline, which becomes tedious and error prone as the test base grows large. Second, test source maintenance is easier, since the coverage expectations are entirely independent of the positions of the designated lines in the sources. Comments may be added or subprograms reordered, for example, without any need to update the expected results.

The main shortcoming of the framework is its specialization. It is currently tailored for coverage analysis tools, and the code supports only the tool for which the environment was originally developed. Nevertheless, generalization is possible in a number of directions. Support has been developed for the C language, for example, and could be added for other languages based on customer demand. The framework could also be adapted to other coverage analysis tools, provided they feature a command-line interface; there is no fundamental limitation in this area.

Abstraction capabilities

The tags scheme, which allows designating sets of lines, provides greater abstraction than a mere line-naming facility in which each specific line would need to be matched mechanically by an expectation. Moreover, the tag in /tag/ lines is interpreted as a regular expression, which offers powerful ways to construct elaborate line set patterns, and a careful selection of tags can simplify the expression of expected results significantly. In a way, devising a set of tags for a test amounts to defining a very basic micro-language for designating source line sets, and from this perspective the tags scheme offers a kind of meta-language that gets instantiated for every test.
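Tag expansion can be sketched in a few lines of Python: collect the tag carried by each line of a functional source, then select the lines whose tag matches the /tag/ pattern. Two assumptions here are not confirmed by the article: tags are written as a trailing “--# tag” comment, and the pattern must match the whole tag. The last check in the usage shows why expectations survive edits to the source: adding a comment line shifts line numbers, but the tag still designates the right line.

```python
import re
from typing import Dict, Set

# Assumed tag syntax in functional sources: a trailing "--# <tag>" comment.
TAG_COMMENT = re.compile(r"--#\s*(\S+)\s*$")

def tagged_lines(source: str) -> Dict[int, str]:
    """Map 1-based line numbers to the tag found on that line."""
    tags = {}
    for number, text in enumerate(source.splitlines(), start=1):
        m = TAG_COMMENT.search(text)
        if m:
            tags[number] = m.group(1)
    return tags

def lines_for(tag_pattern: str, tags: Dict[int, str]) -> Set[int]:
    """Lines whose tag fully matches the /tag/ pattern, read as a regular expression."""
    pattern = re.compile(tag_pattern)
    return {line for line, tag in tags.items() if pattern.fullmatch(tag)}

# Hypothetical reconstruction of the tagged In_Range source.
IN_RANGE = """\
function In_Range (X, Min, Max : Integer) return Boolean is
begin
   if X >= Min and then X <= Max then  --# expr_eval
      return True;                     --# expr_true
   else
      return False;                    --# expr_false
   end if;
end In_Range;
"""
tags = tagged_lines(IN_RANGE)
print(lines_for("expr_(true|false)", tags))
```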

Another level of factorization is allowed by the capability to share sources between tests, and in particular to have a common set of driver sources for different implementations of a functional idiom.

As an example, consider the goal of testing proper behavior of the tool on a Boolean expression like “A and then B” in Ada. A natural starting point is the simplest case where A and B are simple Boolean variables, with functional code such as that shown in Figure 6.

[Figure 6 | Shown here is an example test of the structural coverage analysis tool’s behavior using the simple Boolean expression “A and then B” in the Ada language.]


To exercise the code in Figure 6, a few drivers could be written that call the Eval_Andthen procedure directly in different ways, once or multiple times, passing different values for A and B and stating the expected results accordingly.

Additional tests are then of interest, for instance for functional code with operands more complex than elementary Boolean variables. If such tests are written as independent entities, using the basic case as a model, it quickly becomes apparent that the driver sources needed are extremely similar to those written first: they just call into the functional code differently and carry identical coverage expectations.

Instead, an environment can be set up where each set of tests for a kind of operand provides a helper API that the driver code can always use in the same fashion, regardless of the actual operand kinds. The driver code in Figure 7 provides an example, where FUAND stands for “Functional And”. The helper package is expected to provide an “Eval_TT_T” subprogram that calls into the functional code, arranging for both operands to evaluate True (_TT_) so the decision evaluates True as well (trailing _T):

[Figure 7 | The example driver code displayed here uses a helper API that can always be used in the same way regardless of the type of operand, where the “Eval_TT_T” subprogram calls into the functional code with both operands evaluating True (_TT_), so that the decision evaluates True (_T).]

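The helper names act as a contract shared by all operand-kind variants: each name encodes the operand values to produce and the resulting decision outcome. As an illustration only, the Python sketch below decodes names following the “Eval_TT_T” pattern and checks that the encoded outcome agrees with the semantics of “A and then B”; the decoding rules are an assumption extrapolated from that single example.

```python
from typing import Tuple

def decode_helper(name: str) -> Tuple[Tuple[bool, ...], bool]:
    """Split a helper name such as "Eval_TT_T" into operand values and decision outcome."""
    prefix, operands, outcome = name.split("_")
    if prefix != "Eval":
        raise ValueError(f"not a helper name: {name}")
    to_bool = {"T": True, "F": False}
    return tuple(to_bool[c] for c in operands), to_bool[outcome]

def consistent_with_and_then(name: str) -> bool:
    """Check that the encoded outcome matches "A and then B" for the encoded operands."""
    (a, b), outcome = decode_helper(name)
    # Short-circuit evaluation skips B when A is False but does not change the result.
    return (a and b) == outcome

for helper in ("Eval_TT_T", "Eval_TF_F", "Eval_FT_F"):
    print(helper, decode_helper(helper), consistent_with_and_then(helper))
```

A check of this kind could help keep helper packages for new operand kinds aligned with the shared drivers.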

Adding tests for a new combination of operand kinds then just requires providing the functional code and helper package, and the addition of a driver source automatically benefits all the operand kind variants already in place. This is a pretty powerful mechanism, which can even be generalized further to support coverage assessments for decisions in general contexts, not only as controlling expressions in if-statements.

Summary and perspectives

As part of developing a framework for in-house testing of a coverage analysis tool, we have devised an approach where the expectations on coverage results are expressed as special comments within the test sources. A few important aspects of this scheme have been outlined here. The framework encourages active thinking about what the expected results should be for each test, and offers abstraction facilities that allow development and maintenance efforts to be factorized.

The approach described served as the basis of our GNATcoverage tool qualification for several industrial projects that used the tool as part of DO-178B and DO-178C certifications in the avionics domain, up to the most stringent certification level, which requires MC/DC. Based on this work we are evaluating possible ways to formalize aspects of our testing strategies for coverage analysis issues, in particular regarding the implications of proper MC/DC testing with respect to expression topologies, expression context, and the kind and complexity of operands.

AdaCore

LinkedIn: www.linkedin.com/company-beta/39996

GitHub: github.com/AdaCore

References:

1. RTCA. (2011, December 13). RTCA DO-178C/EUROCAE ED-12C, Software Considerations in Airborne Systems and Equipment Certification. RTCA.

2. Hayhurst, K. J., Veerhusen, D. S., et al. (2001, May 1). A Practical Tutorial on Modified Condition/Decision Coverage. NASA.