Automotive Safety Through Multi-Modal Sensing

March 06, 2024


Motor vehicle crashes are a leading cause of death in many countries. In the United States alone, crashes kill over 100 people a day. Road deaths spiked during the pandemic, and although they have since slowly declined, as U.S. Transportation Secretary Pete Buttigieg notes, “we still have a long way to go.”

To reduce the number of accidents, injuries, and fatalities, regulators and vehicle original equipment manufacturers (OEMs) aim to bring uncrashable cars to market. However, to achieve the vision of uncrashable cars, OEMs must first overcome a variety of technological and commercial challenges.

Interest grows in Advanced Driver Assistance Systems

In modern vehicles, driver safety is enhanced with Advanced Driver Assistance Systems (ADAS)—including blind spot detection (BSD), automatic emergency braking (AEB), lane departure warnings (LDW), and forward collision warnings (FCW). These systems use a variety of built-in sensors to detect and respond to the surrounding environment.

Regulatory and consumer interest in ADAS applications is increasing rapidly, fueled by the desire to protect drivers and other road users and to reduce accidents and deaths. On the regulatory front, governments are increasingly mandating ADAS-enabled safety features in new vehicles. For example, the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) has recently announced several ADAS-related vehicle safety initiatives, including proposed rules to require seat belt warning systems, AEB, and pedestrian AEB (PAEB) in select vehicles.

In the European Union, a global leader in automotive safety regulation, the Vehicle General Safety Regulation, introduced in 2022, now also mandates ADAS applications including intelligent speed assistance, reversing detection of vulnerable road users such as pedestrians and cyclists, and driver distraction detection and warning. The legislation also establishes a legal framework for the approval of automated and fully driverless vehicles.

OEMs in the U.S. may also benefit from recent legislation such as the Bipartisan Infrastructure Law, which invests billions in roadway safety measures.

In addition to regulation, international new car assessment programs (NCAPs) are raising the bar by adding safety tests that can only be passed with ADAS on board, making these systems a prerequisite for the coveted “5 star” ratings desired by consumers.

But while the adoption of driver and road user safety features will be driven in part by safety regulations, NCAPs, and improved consumer awareness, innovation will ultimately be the driving force: specifically, the development and deployment of new and more efficient sensor and processing technologies.

Advantages of multiple sensor modalities

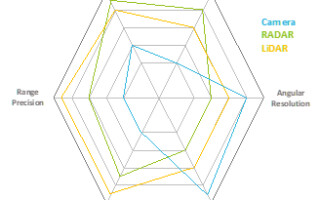

Sensing functions can be implemented by different sensor modalities, including cameras, radar, and LiDAR (light detection and ranging), each of which gathers data about the vehicle’s environment. Since each sensor modality has unique benefits but also shortcomings, the ultimate vision of an uncrashable car will need to leverage multiple sensor modalities in conjunction.

Cameras, or vision sensors, are one of the most widely used vehicle sensors today. In combination with perception algorithms, automotive cameras can emulate human vision in their ability to distinguish between different shapes, colors, types, and categories of objects, and so are widely used for surround and back-up viewing, AEB, PAEB, LDW, and lane change assistance (LCA). However, as with human vision, camera vision is easily impaired by challenging environmental conditions such as low light, fog, and rain. Because of these challenges, OEMs typically complement camera-based ADAS sensing with radar sensors.

Radar sensors transmit radio waves in a targeted direction (often using beam-steering techniques for added directionality) and analyze the reflected signals to identify the location, speed, and direction of an object. Compared with cameras, radar is less affected by adverse weather conditions, and so is widely deployed for FCW, AEB, and BSD. While radar is a versatile all-round sensor for all-weather use, its resolution is bounded by its wavelength, which is around 4 mm for a 77 GHz system. For higher precision, LiDAR sensing is now being considered and deployed as an additional ADAS sensor by OEMs.
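The figure of around 4 mm for a 77 GHz system lines up with the free-space wavelength of the radar carrier, which follows from wavelength = speed of light / frequency. The short sketch below checks that arithmetic; the function name `wavelength_mm` is illustrative, and reading the quoted figure as a wavelength-scale limit is an interpretation rather than something the article states outright.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_mm(frequency_hz: float) -> float:
    """Return the free-space wavelength in millimetres for a carrier frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    # lambda = c / f, converted from metres to millimetres.
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1_000.0


if __name__ == "__main__":
    radar_wavelength = wavelength_mm(77e9)  # 77 GHz automotive radar band
    print(f"77 GHz wavelength: {radar_wavelength:.2f} mm")
```

Running it gives a wavelength just under 4 mm, consistent with the figure quoted above.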

LiDAR is a relatively new and advanced sensor modality that uses infrared light instead of radio waves to calculate the distance to the object being detected. The use of infrared light means that LiDAR has a potential resolution on the order of 2500x finer than a typical radar system. Coherent detection, of which frequency modulated continuous wave (FMCW) is the typical implementation, enables per-point velocity determination, providing additional information to downstream perception algorithms.
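To see where a figure on the order of 2500x can come from, and how a coherent sensor turns a frequency shift into a per-point velocity, here is a minimal sketch. It assumes a LiDAR wavelength of 1550 nm, a common near-infrared choice that the article itself does not specify; a shorter wavelength such as 905 nm would give a larger ratio. The velocity function uses the standard Doppler relation v = f_d × λ / 2 for a sensor that both transmits and receives, and the 2 MHz shift in the usage lines is a made-up illustrative input, not measured data.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

RADAR_FREQUENCY_HZ = 77e9      # radar band quoted in the text
LIDAR_WAVELENGTH_M = 1550e-9   # ASSUMPTION: not stated in the article


def wavelength_ratio(radar_frequency_hz: float, lidar_wavelength_m: float) -> float:
    """Return how many times longer the radar wavelength is than the LiDAR one."""
    radar_wavelength_m = SPEED_OF_LIGHT_M_PER_S / radar_frequency_hz
    return radar_wavelength_m / lidar_wavelength_m


def radial_velocity_m_per_s(doppler_shift_hz: float, wavelength_m: float) -> float:
    """Convert a measured Doppler shift into radial (line-of-sight) velocity.

    The factor of 2 accounts for the round trip: the shift is applied once
    on the way to the target and once on the way back.
    """
    return doppler_shift_hz * wavelength_m / 2.0


if __name__ == "__main__":
    ratio = wavelength_ratio(RADAR_FREQUENCY_HZ, LIDAR_WAVELENGTH_M)
    print(f"Radar/LiDAR wavelength ratio: about {ratio:.0f}x")

    # Hypothetical Doppler shift for one LiDAR point, for illustration only.
    velocity = radial_velocity_m_per_s(2e6, LIDAR_WAVELENGTH_M)
    print(f"Radial velocity for a 2 MHz shift: {velocity:.2f} m/s")
```

Because every return carries its own Doppler shift, this calculation can be repeated for each point in the cloud, which is what "per-point velocity" refers to.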

Like radar, LiDAR is less affected by weather conditions than cameras (and coherent detection additionally exhibits good interference immunity), but it also requires significant signal processing to perform its tasks, demanding innovation to limit power, size, and cost.

Because LiDAR offers better resolution, range, and depth perception than cameras and radar, it is becoming a key focus technology for OEMs for long-range object detection (such as fallen cargo on a highway) and short-range, high-precision detection (such as pedestrians in back-up scenarios). Beyond ADAS, its enhanced capabilities also make LiDAR a key sensor modality for higher levels of driving automation and autonomous driving.


Figure 1. A representation of sensor modality capabilities in ADAS

Automakers typically deploy multiple modalities to maximize ADAS capabilities. Deploying more sensors for ADAS increases the volume of data to be transported and processed, which in turn escalates demand for expensive cabling and power-hungry in-vehicle computing: yet another challenge that must be overcome on the road toward uncrashable cars.

The future is in sensor fusion

Uncrashable cars are an aspirational vision for the industry and may not be realizable for the foreseeable future. But the industry needs to keep striving for this goal because of the ultimate societal benefit: lives are literally at stake. OEMs are increasingly integrating a wide variety of sensor modalities to enhance drivers’ environmental perception and vehicle safety responses. Zone-based distributed processing architectures that leverage sensor fusion will help to balance the competing requirements of deploying more ADAS sensors, keeping system processing power-efficient, and minimizing OEMs’ overall bill of materials.
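As one illustration of what fusing readings from several modalities can look like, here is a minimal sketch of inverse-variance weighting, a standard textbook way to combine independent estimates of the same quantity. It is not drawn from any particular OEM's architecture; the `RangeEstimate` type, the `fuse_ranges` function, and the noise figures in the usage lines are all hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from typing import Sequence, Tuple


@dataclass(frozen=True)
class RangeEstimate:
    """One sensor's estimate of the distance to the same object (hypothetical type)."""
    sensor: str
    range_m: float
    std_dev_m: float  # assumed 1-sigma measurement noise


def fuse_ranges(estimates: Sequence[RangeEstimate]) -> Tuple[float, float]:
    """Fuse independent range estimates by inverse-variance weighting.

    Returns the fused range and its standard deviation. Sensors with
    smaller noise receive proportionally larger weights.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(e.std_dev_m <= 0 for e in estimates):
        raise ValueError("std_dev_m must be positive")

    weights = [1.0 / (e.std_dev_m ** 2) for e in estimates]
    total_weight = sum(weights)
    fused_range = sum(w * e.range_m for w, e in zip(weights, estimates)) / total_weight
    fused_std_dev = sqrt(1.0 / total_weight)
    return fused_range, fused_std_dev


if __name__ == "__main__":
    # Illustrative numbers only; real sensor noise depends on the hardware and scene.
    readings = [
        RangeEstimate("camera", 52.0, 2.0),
        RangeEstimate("radar", 50.5, 0.5),
        RangeEstimate("lidar", 50.1, 0.1),
    ]
    fused, std_dev = fuse_ranges(readings)
    print(f"Fused range: {fused:.2f} m (std dev {std_dev:.2f} m)")
```

The fused estimate is never noisier than the best single sensor, which is one reason combining modalities can pay off even when one of them already performs well on its own.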

Bringing camera, radar and LiDAR modalities together is pushing the automotive industry forward and accelerating the pace of development of safer vehicles across the globe to benefit road users everywhere.