Enhancing Android App Video Features with HDR Support

September 27, 2023

Sponsored Story

Mobile devices increasingly support high-quality media capabilities such as high dynamic range (HDR) video, and Android devices are no exception. Standard dynamic range (SDR) is still used almost universally, but it cannot represent the broader color spectrum, brighter highlights, and deeper shadows that HDR can.

To ease the transition from SDR to HDR, Android 13 introduced a requirement that devices with 10-bit YUV capability must also support SDR. On the HDR side, the Android documentation states: “HLG10 is the baseline HDR standard that device makers must support on cameras with 10-bit.” HLG10 (Hybrid Log-Gamma 10) is therefore the base format for capturing HDR content. Applications have the option to incorporate additional HDR standards such as HDR10, HDR10+, and Dolby Vision 8.4.

For Android app developers interested in integrating HDR support into their camera-to-end-user pipeline, it's a good idea to become familiar with the Camera2 API (the android.hardware.camera2 package). The API gives low-level access to device-specific functions and supports complex use cases, at the cost of having to manage device-specific configurations yourself.

Before diving into Camera2, it's important to grasp several key terms that you'll encounter when implementing a standard camera-to-end-user pipeline: capture, edit, encode, transcode, and decode.

During capture, the device retrieves data from the onboard camera sensor(s). Edit refers to processing raw data as either HDR or SDR at the codec level. Encoding compresses raw data, and decoding decompresses it. Transcoding means decompressing the video and then re-encoding it, potentially in a different codec format or from HDR to SDR.

HLG Support Confirmation

First, a developer must confirm the device's support for HLG. Android's Camera2 API simplifies this process with its straightforward interface. Begin by checking for the presence of a 10-bit camera using the following code snippet:
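A minimal sketch of this check, assuming the app already holds a CameraManager and a camera ID. The function name supportsHlg10 is illustrative and not part of Camera2. It first looks for the 10-bit dynamic range capability and then confirms that HLG10 is among the profiles the camera advertises.

```kotlin
import android.hardware.camera2.CameraCharacteristics
import android.hardware.camera2.CameraManager
import android.hardware.camera2.CameraMetadata
import android.hardware.camera2.params.DynamicRangeProfiles
import android.os.Build

// Hypothetical helper: returns true when the given camera reports 10-bit
// output and lists HLG10 among its supported dynamic range profiles.
fun supportsHlg10(cameraManager: CameraManager, cameraId: String): Boolean {
    // The 10-bit capability and DynamicRangeProfiles were added in Android 13 (API 33).
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.TIRAMISU) return false

    val characteristics = cameraManager.getCameraCharacteristics(cameraId)

    // Step 1: does the camera advertise the 10-bit dynamic range capability?
    val capabilities = characteristics.get(
        CameraCharacteristics.REQUEST_AVAILABLE_CAPABILITIES
    ) ?: return false
    if (CameraMetadata.REQUEST_AVAILABLE_CAPABILITIES_DYNAMIC_RANGE_TEN_BIT !in capabilities) {
        return false
    }

    // Step 2: is HLG10 in the list of supported profiles?
    val profiles = characteristics.get(
        CameraCharacteristics.REQUEST_AVAILABLE_DYNAMIC_RANGE_PROFILES
    ) ?: return false
    return DynamicRangeProfiles.HLG10 in profiles.supportedProfiles
}
```

An app might call this once per entry in cameraManager.cameraIdList and fall back to SDR capture when it returns false.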

Next, set up a CameraCaptureSession for a CameraDevice to capture video. A CameraCaptureSession abstracts the image-capture process from a camera to one or more target surfaces. The code example below is from Android’s HDR Video Capture topic. It demonstrates how to create a CameraCaptureSession based on the OS version:
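The sketch below is modeled on that approach rather than copied from it. The function name and parameter names are illustrative. On Android 13 and later it attaches the requested dynamic range profile to every output. On earlier versions it creates a plain SDR session and returns false so the caller knows HDR was not applied.

```kotlin
import android.hardware.camera2.CameraCaptureSession
import android.hardware.camera2.CameraDevice
import android.hardware.camera2.params.OutputConfiguration
import android.os.Build
import android.os.Handler
import android.view.Surface

// Hypothetical helper: creates a capture session and returns true if the
// requested dynamic range profile (for example DynamicRangeProfiles.HLG10)
// was attached to the outputs.
@Suppress("DEPRECATION") // Both session-creation calls below are deprecated since API 30.
fun createCaptureSessionForProfile(
    device: CameraDevice,
    targets: List<Surface>,
    profile: Long,
    stateCallback: CameraCaptureSession.StateCallback,
    handler: Handler? = null
): Boolean {
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.TIRAMISU) {
        // Wrap each target surface in an OutputConfiguration that carries the profile.
        val outputConfigs = targets.map { target ->
            val config = OutputConfiguration(target)
            config.setDynamicRangeProfile(profile)
            config
        }
        device.createCaptureSessionByOutputConfigurations(outputConfigs, stateCallback, handler)
        return true
    }
    // Before Android 13 there is no dynamic range profile API, so fall back to SDR.
    device.createCaptureSession(targets, stateCallback, handler)
    return false
}
```

Newer code may prefer the SessionConfiguration-based createCaptureSession() overload; the deprecated calls are kept here only to keep the sketch short.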

Note that a preview stream and its shared streams require a low-latency profile, but this is optional for video streams. An application can determine whether there’s an extra look-ahead delay for any of the HDR modes by invoking DynamicRangeProfiles.isExtraLatencyPresent() (passing in DynamicRangeProfiles.HDR10_PLUS, DynamicRangeProfiles.HDR10, or DynamicRangeProfiles.HLG10) before invoking setDynamicRangeProfile().
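As a rough illustration of the latency check, the sketch below assumes a DynamicRangeProfiles object already read from the camera characteristics. The helper name profilesWithExtraLatency is hypothetical. Unsupported profiles are filtered out first, because isExtraLatencyPresent() is only meaningful for profiles the camera actually lists.

```kotlin
import android.hardware.camera2.params.DynamicRangeProfiles
import android.os.Build
import androidx.annotation.RequiresApi

// Hypothetical helper: returns the supported HDR profiles that add
// look-ahead latency and are therefore poor candidates for a preview stream.
@RequiresApi(Build.VERSION_CODES.TIRAMISU)
fun profilesWithExtraLatency(profiles: DynamicRangeProfiles): List<Long> {
    val candidates = listOf(
        DynamicRangeProfiles.HLG10,
        DynamicRangeProfiles.HDR10,
        DynamicRangeProfiles.HDR10_PLUS
    )
    val supported = profiles.supportedProfiles
    return candidates.filter { profile ->
        // Only query profiles the camera reports as supported.
        profile in supported && profiles.isExtraLatencyPresent(profile)
    }
}
```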

The session object can then be used for both preview and recording. This code demonstrates how to initiate a preview by invoking a repeating CaptureRequest:

session.setRepeatingRequest(previewRequest, null, cameraHandler)

It’s also important to note that A) cameraHandler is the Handler on whose thread the listener is invoked (pass null to use the current thread's looper), and B) applications using different HDR profiles for preview and video must verify that the combination is valid using getProfileCaptureRequestConstraints().
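A small sketch of point B, assuming the same DynamicRangeProfiles object as before. The function name canCombineProfiles is illustrative. It treats an empty constraint set as "no restrictions", which is how the method documents that case.

```kotlin
import android.hardware.camera2.params.DynamicRangeProfiles
import android.os.Build
import androidx.annotation.RequiresApi

// Hypothetical helper: checks whether a preview profile and a video profile
// can be referenced together in a single capture request.
@RequiresApi(Build.VERSION_CODES.TIRAMISU)
fun canCombineProfiles(
    profiles: DynamicRangeProfiles,
    previewProfile: Long,
    videoProfile: Long
): Boolean {
    val supported = profiles.supportedProfiles
    // Both profiles must be supported before constraints can be queried.
    if (previewProfile !in supported || videoProfile !in supported) return false

    val constraints = profiles.getProfileCaptureRequestConstraints(previewProfile)
    // An empty set means the preview profile has no combination constraints.
    return constraints.isEmpty() || videoProfile in constraints
}
```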

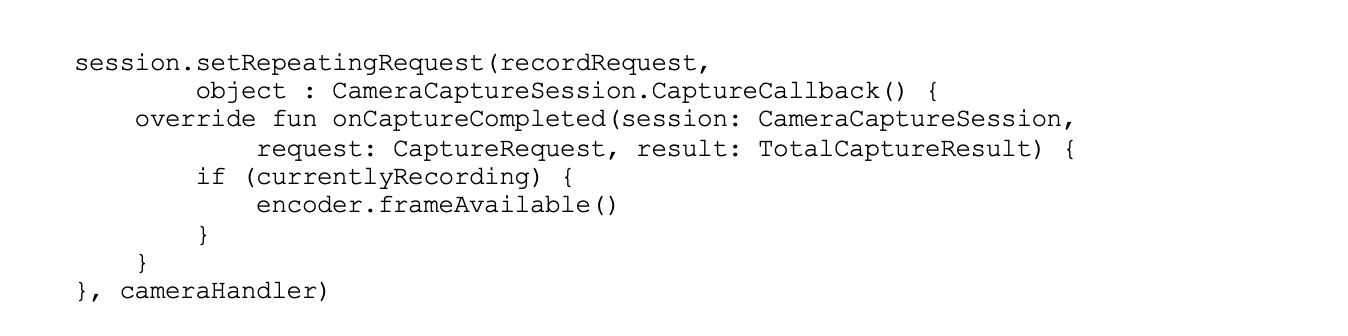

A repeating CaptureRequest maintains a continuous stream of frames, which eliminates the need to issue capture requests frame by frame. The first parameter is a CaptureRequest that contains the information required for the capture, such as capture hardware, output buffer, target surface(s), and more. Similarly, initiating a recording involves starting a repeating request. The code below shows this request with a CaptureCallback for tracking the capture progress (e.g., started, stopped, etc.):
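A minimal sketch of such a recording request, assuming the session, recordRequest, and cameraHandler already exist. The startRecording function and its onFrameCaptured parameter are illustrative names. What the app does per frame (for example, notifying an encoder) is left to the caller.

```kotlin
import android.hardware.camera2.CameraCaptureSession
import android.hardware.camera2.CaptureFailure
import android.hardware.camera2.CaptureRequest
import android.hardware.camera2.TotalCaptureResult
import android.os.Handler
import android.util.Log

// Hypothetical helper: starts a repeating recording request and reports
// each completed frame number to the caller.
fun startRecording(
    session: CameraCaptureSession,
    recordRequest: CaptureRequest,
    cameraHandler: Handler?,
    onFrameCaptured: (frameNumber: Long) -> Unit
) {
    val callback = object : CameraCaptureSession.CaptureCallback() {
        // Called once per frame when the full capture result is available.
        override fun onCaptureCompleted(
            session: CameraCaptureSession,
            request: CaptureRequest,
            result: TotalCaptureResult
        ) {
            onFrameCaptured(result.frameNumber)
        }

        // Called when the camera could not produce a result for a frame.
        override fun onCaptureFailed(
            session: CameraCaptureSession,
            request: CaptureRequest,
            failure: CaptureFailure
        ) {
            Log.w("HdrRecording", "Capture failed for frame ${failure.frameNumber}")
        }
    }
    session.setRepeatingRequest(recordRequest, callback, cameraHandler)
}
```

Calling session.stopRepeating() ends the stream when the recording finishes.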

The MediaCodec API is used for video editing. To determine whether the device supports HDR video editing, call getCapabilitiesForType() on a codec's MediaCodecInfo, which returns a MediaCodecInfo.CodecCapabilities object. Then call the isFeatureSupported() method on this object, passing in the FEATURE_HdrEditing string. If the method returns true, the encoder supports both YUV and RGB input, and it transforms and tone-maps RGBA1010102 to encodable YUV P010. For instance, if a user records an HDR video in HLG, they can downscale it, rotate it, add overlays, and save it in HDR format. The TransformationRequest.Builder class's experimental_setEnableHdrEditing() method aids in constructing a transformation for HDR editing.
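The capability check can be sketched as follows. The function name findHdrEditingEncoder and the default HEVC MIME type are assumptions made for illustration. The sketch walks the regular codec list and returns the name of the first encoder that reports FEATURE_HdrEditing.

```kotlin
import android.media.MediaCodecInfo
import android.media.MediaCodecList
import android.media.MediaFormat
import android.os.Build

// Hypothetical helper: returns the name of an encoder for mimeType that
// supports HDR editing, or null if none is found.
fun findHdrEditingEncoder(mimeType: String = MediaFormat.MIMETYPE_VIDEO_HEVC): String? {
    // FEATURE_HdrEditing was added in Android 13 (API 33).
    if (Build.VERSION.SDK_INT < Build.VERSION_CODES.TIRAMISU) return null

    val codecList = MediaCodecList(MediaCodecList.REGULAR_CODECS)
    for (info in codecList.codecInfos) {
        if (!info.isEncoder) continue
        // getCapabilitiesForType() throws for unsupported types, so check first.
        if (info.supportedTypes.none { it.equals(mimeType, ignoreCase = true) }) continue

        val capabilities = info.getCapabilitiesForType(mimeType)
        if (capabilities.isFeatureSupported(MediaCodecInfo.CodecCapabilities.FEATURE_HdrEditing)) {
            return info.name
        }
    }
    return null
}
```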

Transcoding HDR to SDR

You may need to transcode HDR content into SDR so that it can be shared across diverse devices or exported to different formats. The Snapdragon® mobile platform offers an optimized pipeline that minimizes latency during transformation and can tone-map various HDR formats, including HLG10, HDR10, HDR10+, and Dolby Vision (on licensed devices).

Implementing the Codec.DecoderFactory interface and working with the Media API enables the transcoding. To do this, create a MediaFormat object for your video's MIME type, then call its setInteger() method with the MediaFormat.KEY_COLOR_TRANSFER_REQUEST key and the MediaFormat.COLOR_TRANSFER_SDR_VIDEO value.

The code snippet below illustrates an implementation of the interface's createForVideoDecoding() method. This implementation configures a codec capable of tone-mapping raw video frames to match the requested transfer, if specified by the caller:
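As a rough illustration of the same idea, the sketch below uses the platform MediaCodec API directly rather than the Codec.DecoderFactory interface itself. The function names are hypothetical, and trackFormat is assumed to come from a MediaExtractor track. It requests SDR tone-mapping on Android 12 (API 31) and later, where KEY_COLOR_TRANSFER_REQUEST is available, and then checks whether the decoder accepted the request.

```kotlin
import android.media.MediaCodec
import android.media.MediaFormat
import android.os.Build
import android.view.Surface

// Hypothetical helper: configures a video decoder and asks it to tone-map
// its output to SDR where the platform supports the request.
fun createSdrToneMappingDecoder(trackFormat: MediaFormat, outputSurface: Surface): MediaCodec {
    val mimeType = requireNotNull(trackFormat.getString(MediaFormat.KEY_MIME)) {
        "Track format has no MIME type"
    }
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.S) {
        // Ask the decoder to convert HDR input to an SDR transfer function.
        trackFormat.setInteger(
            MediaFormat.KEY_COLOR_TRANSFER_REQUEST,
            MediaFormat.COLOR_TRANSFER_SDR_VIDEO
        )
    }
    val decoder = MediaCodec.createDecoderByType(mimeType)
    decoder.configure(trackFormat, outputSurface, null, 0)
    return decoder
}

// Hypothetical helper: after configure(), the decoder's input format echoes
// the transfer request only if the codec accepted it.
fun toneMapsToSdr(decoder: MediaCodec): Boolean =
    Build.VERSION.SDK_INT >= Build.VERSION_CODES.S &&
        decoder.inputFormat.getInteger(MediaFormat.KEY_COLOR_TRANSFER_REQUEST, 0) ==
        MediaFormat.COLOR_TRANSFER_SDR_VIDEO
```

If toneMapsToSdr() returns false, the decoder will output HDR frames unchanged, and the app would need another tone-mapping path or should keep the content in HDR.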

Camera2 aids Android app developers looking to incorporate HDR support, providing device query capabilities at runtime, as well as optional code paths to fully leverage HDR on supported devices, such as those powered by Snapdragon mobile platforms.

Check out the new Android on Snapdragon developer portal for learning resources on this and other APIs for your Android development.

Snapdragon branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.